Introduction

In the first blog article about Db2 Hybrid HADR clusters, we defined what exactly “Hybrid” means, how it differs from standard HADR clusters and – most importantly – how a Hybrid HADR cluster can be set up, using the latest Db2 releases.

In this sequel article, we will look more closely at the security aspects of Hybrid HADR cluster implementations and what can be done to improve those.

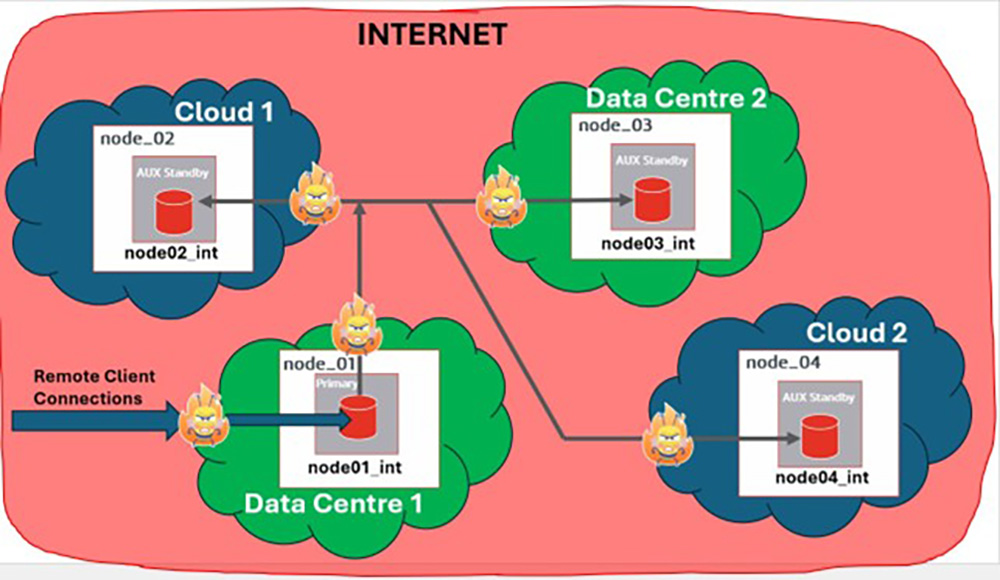

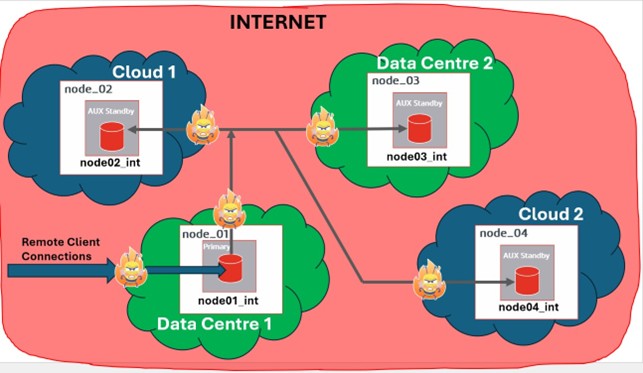

The following diagram, depicting a Hybrid HADR cluster with nodes spread across different cloud providers, can give us a better understanding of where and how our data can be exposed to the outside world (i.e. to the open Internet):

First of all, let’s postulate that each Cloud provider (as well as any on-premises Data Centres) provides a robust and safe environment, completely isolated from the rest of the Internet.

And we have every right to do so – all major Cloud providers take their cloud environment security very seriously – just take a look at their security specs: Azure, AWS, GCP, IBM.

Therefore, we can conclude with reasonable confidence that the data residing in databases within the various Cloud environments (and, presumably, in on-premises Data Centres too) is sufficiently protected from the outside world by default.

This leaves us with the communication channels between the HADR nodes. Because in Hybrid clusters the HADR nodes are remote by definition, and each one resides in a different – and isolated – Cloud (or on-prem) environment, they will have to use some form of Inter-linking infrastructure to communicate with each other.

In its simplest and also cheapest form (which I also happened to use for my R&D cluster), the remote HADR nodes will communicate with each other over the public Internet, which is by default open and insecure. If you don’t encrypt your communications (i.e. “Data in Transit”), practically anyone along the way will be able to read your data. And, by default, HADR does not encrypt its communication channels.

So, we have a problem there…

There are basically two ways of resolving the “Data in Transit” security issue in Hybrid HADR clusters:

- Use dedicated links between HADR nodes

- Encrypt the HADR communications

The first option means using private (leased) links that no one else (in theory) has access to, or even deploying your own hardware (optical cables, routers, …) between the HADR nodes, to be absolutely sure your network traffic is private.

This is definitely the more expensive option, and can pose significant logistical challenges, depending on where the remote HADR nodes are located and how far apart they are from each other.

Obviously, this was (and is) way above my R&D budget, so I couldn’t do any testing of these advanced options.

The second option – encryption – comes at no additional cost with Db2 HADR, works equally well regardless of the distances involved and offers excellent levels of protection, so that is the one we will explore in this article.

How To Set Up TLS Comms in a HADR Cluster

Just to recap, following is the current configuration in our R&D Hybrid HADR cluster, consisting of three nodes – two in the AWS cloud environment and one in the Azure cloud environment:

Hosts file

On each node, the internal and external IP addresses, together with the logical host names, are defined in the /etc/hosts file:

# DB2 HADR nodes, including internal and external IP addresses for all nodes:

#

10.1.0.4 db2_azure_aux_int

20.82.105.251 db2_azure_aux_ext

#

172.31.0.12 db2_aws_node01_int

3.14.228.248 db2_aws_node01_ext

#

172.31.9.6 db2_aws_node02_int

18.116.135.129 db2_aws_node02_ext

#
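Since every HADR parameter below refers to these logical names, it can be worth confirming that all six are actually defined on each node. The following is only a minimal sketch and not part of my original setup: the function names hosts_ip_for and check_hadr_hosts are made up for illustration, and the script only reads the hosts file (it does not test connectivity).

```shell
#!/bin/sh
# Hypothetical helper: print the IP address mapped to a host name
# in a hosts-format file (defaults to /etc/hosts).
hosts_ip_for() {
    # $1 = host name, $2 = hosts file
    awk -v name="$1" '
        /^[[:space:]]*#/ { next }    # skip comment lines
        { for (i = 2; i <= NF; i++) if ($i == name) { print $1; exit } }
    ' "${2:-/etc/hosts}"
}

# Hypothetical helper: report every cluster host name from this article
# and return non-zero if any of them is missing from the file.
check_hadr_hosts() {
    hosts_file="${1:-/etc/hosts}"
    missing=0
    for h in db2_azure_aux_int db2_azure_aux_ext \
             db2_aws_node01_int db2_aws_node01_ext \
             db2_aws_node02_int db2_aws_node02_ext; do
        ip=$(hosts_ip_for "$h" "$hosts_file")
        if [ -z "$ip" ]; then
            echo "MISSING: $h"
            missing=1
        else
            echo "$h -> $ip"
        fi
    done
    return "$missing"
}

# Usage: check_hadr_hosts            (checks /etc/hosts)
#        check_hadr_hosts ./hosts    (checks another file)
```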

HADR Configuration (before TLS)

Following is the current HADR configuration, before setting up the TLS (just showing the most relevant parameters here):

AWS_node01:

HADR local host name (HADR_LOCAL_HOST) = db2_aws_node01_int|db2_aws_node01_ext

HADR local service name (HADR_LOCAL_SVC) = 50100

HADR remote host name (HADR_REMOTE_HOST) = db2_aws_node02_int

HADR remote service name (HADR_REMOTE_SVC) = 50100

HADR rem. instance name (HADR_REMOTE_INST) = db2inst1

HADR target list (HADR_TARGET_LIST) = db2_aws_node02_int:50100|db2_azure_aux_ext:50100

HADR log write sync mode (HADR_SYNCMODE) = NEARSYNC

AWS_node02:

HADR local host name (HADR_LOCAL_HOST) = db2_aws_node02_int|db2_aws_node02_ext

HADR local service name (HADR_LOCAL_SVC) = 50100

HADR remote host name (HADR_REMOTE_HOST) = db2_aws_node01_int

HADR remote service name (HADR_REMOTE_SVC) = 50100

HADR rem. instance name (HADR_REMOTE_INST) = db2inst1

HADR target list (HADR_TARGET_LIST) = db2_aws_node01_int:50100|db2_azure_aux_ext:50100

HADR log write sync mode (HADR_SYNCMODE) = NEARSYNC

Azure_node:

HADR local host name (HADR_LOCAL_HOST) = db2_azure_aux_int|db2_azure_aux_ext

HADR local service name (HADR_LOCAL_SVC) = 50100

HADR remote host name (HADR_REMOTE_HOST) = db2_aws_node01_ext

HADR remote service name (HADR_REMOTE_SVC) = 50100

HADR rem. instance name (HADR_REMOTE_INST) = db2inst1

HADR target list (HADR_TARGET_LIST) = db2_aws_node01_ext:50100|db2_aws_node02_ext:50100

HADR log write sync mode (HADR_SYNCMODE) = NEARSYNC
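To pull just these parameters out of the full database configuration listing, a small filter can help. This is a sketch that assumes the output of "db2 get db cfg" keeps the "(PARAMETER) = value" layout shown above; hadr_cfg_summary and cfg_value are hypothetical helper names of my own.

```shell
#!/bin/sh
# Hypothetical helpers; both read "db2 get db cfg for SAMPLE" output on stdin.

# Keep only the HADR parameters listed in this article.
hadr_cfg_summary() {
    grep -E '\((HADR_LOCAL_HOST|HADR_LOCAL_SVC|HADR_REMOTE_HOST|HADR_REMOTE_SVC|HADR_REMOTE_INST|HADR_TARGET_LIST|HADR_SYNCMODE)\)'
}

# Print the value of a single parameter, e.g. cfg_value HADR_SYNCMODE
cfg_value() {
    sed -n "s/^.*($1) = *//p"
}

# Usage (as the instance owner, on any HADR node):
#   db2 get db cfg for SAMPLE | hadr_cfg_summary
#   db2 get db cfg for SAMPLE | cfg_value HADR_TARGET_LIST
```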

TLS Configuration

So now, in order to enable the TLS communications – i.e. encryption – between the HADR nodes, we must do the following:

(all following commands are executed under the Db2 instance owner account db2inst1, the owner of the Hybrid HADR database, SAMPLE)

- Create a key database on all HADR nodes to manage digital certificates

The following commands, executed on Node01, will prepare for and create a key database (or a keystore – called “KeyStore01”), as well as a secure stash file which will store the encrypted password (“<myPwd>”):

mkdir /home/db2inst1/keystore

chmod 700 /home/db2inst1/keystore

cd /home/db2inst1/keystore

gsk8capicmd_64 -keydb -create -db "KeyStore01.kdb" -pw "<myPwd>" -stash

The above is repeated on the rest of the HADR nodes, preferably with different keystore names and passwords. Reusing the same names and passwords on every node would also work, as the keystores are neither related nor shared between the nodes, but that’s hardly a recommended practice in any security book.

- Create self-signed digital certificate(s) and extract them into plain files, for sharing between nodes

The sample commands – for Node01 – are as follows, where the first command creates the digital certificate (within the just created keystore “KeyStore01”) and the second extracts it to an ASCII file:

gsk8capicmd_64 -cert -create -db "KeyStore01.kdb" -stashed -label "Node01_Signed" -dn "CN=TritonRND.HybridHADR.Node01"

gsk8capicmd_64 -cert -extract -db "KeyStore01.kdb" -stashed -label "Node01_Signed" -target "Node01_Signed.arm" -format ascii

The “-stashed” option instructs the GSK command to pull the password from the stash file (which was created, together with the keystore, in the previous step).

The “-label” option gives the newly created digital certificate a name (“Node01_Signed”).

The “-dn” option defines the “distinguished name that uniquely identifies the certificate”.

The “-target” and “-format ascii” options specify the extracted certificate file name and format (we use ASCII here).

These commands are also repeated on the rest of the HADR nodes, taking care to give each digital certificate a unique name (at least within the HADR cluster, as the certificates will be shared across all nodes).
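Because only the node number changes from one node to the next, the two certificate commands can be generated from a small template. The sketch below is my own convenience wrapper, not a Db2 or GSKit feature: node_cert_commands is a hypothetical name, and it assumes the KeyStoreNN / NodeNN_Signed naming and the keystore directory used in this article. It only prints the commands, so they can be reviewed before being executed.

```shell
#!/bin/sh
# Hypothetical helper: print the certificate create/extract commands
# for one node, following the naming convention used in this article.
node_cert_commands() {
    nn="$1"                                # two-digit node number, e.g. 02
    dir="${2:-/home/db2inst1/keystore}"    # keystore directory (assumed)
    db="$dir/KeyStore${nn}.kdb"
    label="Node${nn}_Signed"
    cat <<EOF
gsk8capicmd_64 -cert -create -db "$db" -stashed -label "$label" -dn "CN=TritonRND.HybridHADR.Node${nn}"
gsk8capicmd_64 -cert -extract -db "$db" -stashed -label "$label" -target "$dir/$label.arm" -format ascii
EOF
}

# Usage:
#   node_cert_commands 02         # review the generated commands
#   node_cert_commands 02 | sh    # execute them on Node02
```

Printing first and piping to sh second is a deliberate choice here: a typo in a label is much easier to spot before the certificate exists than after it has been shared across the cluster.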

- Transfer the extracted certificate files (*.arm) to all (other) nodes and import them there

Next, we must transfer the extracted certificates from each node to all other nodes. We can do that with the standard SCP command, or by using any other command/utility suitable for that purpose.

For example, to transfer the digital certificate from Node_01 to Node_02, I used a command like:

scp /home/db2inst1/keystore/Node01_Signed.arm db2inst1@node_02:/home/db2inst1/keystore

To import the digital certificates that we’ve just transferred from other nodes, we can use a command like this one (substituting “XX” and “YY” with the appropriate values):

gsk8capicmd_64 -cert -add -db "KeyStoreXX.kdb" -stashed -label "NodeYY_Signed" -file "NodeYY_Signed.arm" -format ascii
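With three nodes, each keystore needs two imports, and the number grows quickly as nodes are added. A loop over the peer nodes keeps the “XX”/“YY” substitution consistent. Again, this is only a sketch: import_peer_commands is a hypothetical helper, it assumes the naming convention from this article, and it assumes it is run from the keystore directory where the .arm files were copied.

```shell
#!/bin/sh
# Hypothetical helper: print one "-cert -add" command per peer node.
# $1 = local node number, remaining arguments = all node numbers in the cluster.
import_peer_commands() {
    local_nn="$1"
    shift
    for peer in "$@"; do
        # The local (personal) certificate is already in the keystore.
        if [ "$peer" != "$local_nn" ]; then
            echo "gsk8capicmd_64 -cert -add -db \"KeyStore${local_nn}.kdb\" -stashed -label \"Node${peer}_Signed\" -file \"Node${peer}_Signed.arm\" -format ascii"
        fi
    done
}

# Usage on Node01 of a three-node cluster:
#   import_peer_commands 01 01 02 03         # review
#   import_peer_commands 01 01 02 03 | sh    # execute
```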

When the transfer/import work is done on all HADR nodes, we can check the local keystores to confirm all digital certificates are there with the following command (this example is for Node_01):

gsk8capicmd_64 -cert -list all -db "KeyStore01.kdb" -stashed

Certificates found

* default, - personal, ! trusted, # secret key

! Node02_Signed

! Node03_Signed

- Node01_Signed

Here “Node01_Signed” is the “local” or “personal” certificate that was created in the local keystore on Node01 (KeyStore01).

The other certificates (Node02_Signed and Node03_Signed) were copied from remote HADR nodes (Node02, Node03) and imported into the local keystore – and these are marked as “trusted” (sign “!”).

- Configure the Db2 instance for TLS support.

The next step is done by setting the SSL_SVR_KEYDB and SSL_SVR_STASH DBM configuration parameters on all HADR nodes, and then restarting the Db2 instance:

db2 update dbm cfg using SSL_SVR_KEYDB /home/db2inst1/keystore/KeyStoreXX.kdb

db2 update dbm cfg using SSL_SVR_STASH /home/db2inst1/keystore/KeyStoreXX.sth

db2stop

db2start

(here again, “KeyStoreXX” is substituted with a proper keystore name on each HADR node)
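Before restarting the instance, it does no harm to confirm that both files named in the DBM configuration really exist and are readable by the instance owner. The check below is a small sketch of my own (keystore_files_ok is a hypothetical name); it assumes the keystore and its stash file share a base name and use the .kdb and .sth extensions, as they do in this article.

```shell
#!/bin/sh
# Hypothetical helper: verify that the keystore and stash file are readable.
# $1 = keystore directory, $2 = keystore base name (e.g. KeyStore01)
keystore_files_ok() {
    dir="$1"
    name="$2"
    status=0
    for f in "$dir/$name.kdb" "$dir/$name.sth"; do
        if [ ! -r "$f" ]; then
            echo "not readable: $f" >&2
            status=1
        fi
    done
    return "$status"
}

# Usage (as db2inst1):
#   keystore_files_ok /home/db2inst1/keystore KeyStore01 && echo "keystore files look fine"
```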

Optionally, the SSL_VERSIONS DBM configuration parameter can be set as well:

db2 update dbm cfg using SSL_VERSIONS TLSV13

db2stop

db2start

(note: if this configuration parameter is set, it is recommended to set it to the same value on all HADR nodes)

- Configure TLS for the HADR database.

This is done by setting the HADR_SSL_LABEL database parameter, on all HADR nodes:

db2 update db cfg for SAMPLE using HADR_SSL_LABEL NodeXX_Signed

(once again, the value “NodeXX_Signed” must be substituted with the label of the personal certificate on each HADR node)

Start HADR Cluster

At this point, the TLS encryption should be completely configured in the HADR cluster and ready to use!

So, let us now restart the HADR cluster (with the AWS Node02 acting as the current primary) and then check the HADR status to confirm the TLS is indeed active:

AWS Node02, current primary:

AWS Node01, current main standby:

Azure Node, current AUX standby:

All three of the above screenshots show the HADR_FLAGS value SSL_PROTOCOL, which confirms that TLS/SSL encryption is active between all HADR nodes, just what we wanted to see!

(just as a reminder, if your HADR cluster were using default settings, i.e. plain TCP/IP with no encryption, you would see the flag value TCP_PROTOCOL, as I demonstrated in my first blog article)
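The same check can be scripted rather than read off a screen. The sketch below assumes the HADR status is taken from "db2pd -db SAMPLE -hadr" and that each standby block in its output carries a flags line containing either SSL_PROTOCOL or TCP_PROTOCOL; hadr_tls_active is a hypothetical helper name, and the pattern may need adjusting to the exact output of your Db2 level.

```shell
#!/bin/sh
# Hypothetical helper: read HADR status output on stdin and succeed only if
# at least one flags line is present and every flags line shows SSL_PROTOCOL.
hadr_tls_active() {
    awk '
        /HADR_FLAG/ { seen++; if ($0 !~ /SSL_PROTOCOL/) bad++ }
        END { if (seen > 0 && bad == 0) exit 0; exit 1 }
    '
}

# Usage (as the instance owner, on any HADR node):
#   db2pd -db SAMPLE -hadr | hadr_tls_active && echo "HADR traffic is encrypted"
```

Requiring at least one flags line is intentional: empty output (for example, when HADR is not running) is treated as a failure rather than as success.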

Note: there is also further evidence (of using TLS/SSL) to be found in the Db2 diagnostic log, but I will leave that bit for you to explore 😊

So, what about data encryption at rest?

Even if I tried to argue (above) that data encryption at rest isn’t absolutely necessary for Hybrid HADR clusters, by no means did I try to say it should never be done. Quite the contrary, encrypting the data at rest is an important step forward in data security, and everybody should at least consider the pros and cons of doing it (or not doing it) for themselves!

It is just that I haven’t done it in my R&D project yet, so I cannot blog about it just now…

But the good news is that it shouldn’t be very difficult to make that extra step, since all you have to do is:

- Create a keystore (if none exists, or use the same one we created above, for TLS comms)

- Configure the DB instance with the new keystore (again, only if not already done)

- Take a full DB backup of your (unencrypted) database

- Drop your (unencrypted) database

- Restore the DB backup into a new and encrypted database

(for HADR clusters, this means restoring the same backup image onto all HADR nodes and then restarting HADR and letting the standby nodes sync with the primary).

Time permitting, I will try to do this at some point as well and then cover the procedure in a short follow-up blog article.

To Be Continued…

In my first blog article, I promised the following:

In the second part of this blog series, we will extend our Hybrid cluster to include the GCP as well and will spread our test HADR nodes across all three major cloud providers: AWS, Azure and GCP!

Time (and resources) allowing, we will throw one on-premises node into the mix as well.

However, having done a bit of R&D around the security, I decided to write a blog about that topic first, because I think it is very important for any HADR cluster – and particularly for the Hybrid HADR clusters whose parts (communication lines) are exposed to the public Internet!

Finally, I should say that I have in fact already done what I promised, albeit with a little help from my colleagues: my R&D Hybrid Cluster now includes a GCP node (and even an IBM Cloud node!). It’s just that I haven’t found the time to write an article about it yet, but I will try to do so as soon as possible (again, with a little help from those same colleagues who helped me set it up).

So, once again, stay tuned!