INGEST Part 2 – Marriage with Temporal Data

Part 1 was a basic illustration of how INGEST can be used to provide a high-availability data replication mechanism without employing expensive and complicated ETL. In Part 2, I’m going to try and explore a few more complicated options: enriching or extending the data as it is ingested.

One ‘nice-to-have’ that INGEST can deliver is to maintain data in a temporal fashion at your target location. You probably don’t want the extra overhead of System or Business time data being stored on your source OLTP system but, using the mechanism shown in Part 1, we can keep an up-to-date and performant copy of the data with the extra functionality of Business time travel queries being available at the target location.

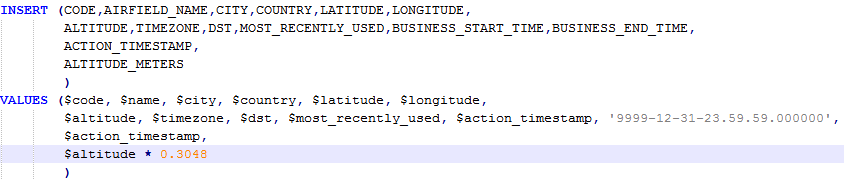

We can also enrich the data with different representations or storage values to obviate the need for expensive calculations at query execution time. For instance, if a base column stores a value in feet, rather than calculating a figure in metres every time you access it, you can include the following code:

Now, there are a few provisos with this approach and it’s going to involve some extra complexity in the DB2 definitions on the target database side. But, once they’re defined, the INGEST process will invoke all the required definitions and will provide a procedure that obviates ETL and is robust, performant and maintainable.

First the provisos:

1. INGEST can only invoke a single statement in each WHEN clause of the MERGE

2. INGEST cannot process SYSTEM TIME

3. If you define an INSTEAD OF trigger for a view, you must define one for delete, update and insert operations (Why is this relevant? Well, keep reading!)

Keeping Temporal Data

The aim of this exercise is to keep temporal, historical data in our target database, without burdening the source database with that extra storage and processing overhead. So, when you:

• INSERT data in the source table, the target table needs to record when that data became active.

• DELETE data in the source table, you need to ‘soft delete’ on the target table by recording the date that the data became inactive

• UPDATE data in the source, you need to perform 2 operations: a ‘soft delete’ of the old version of the data and an Insert of the new version of the data

To accomplish this I’m going to build on the structures shown in Part 1. The source data is still identified and written to a pipe of flat file and delivered to the source database with columns including the action that was taken (U for Update, D for Delete, I for Insert and a timestamp to show when that action took place).

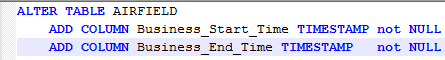

The target AIRFIELD table has been modified to include a BUSINESS TIME definition

Source Data

On the source database, an INSERT, UPDATE and DELETE will take place.

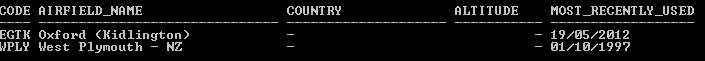

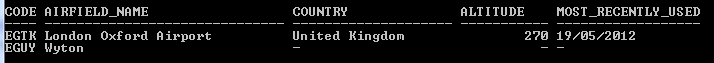

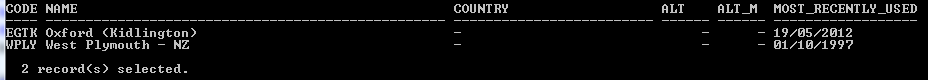

Before they do, this is the relevant data from the source table:

And this is the same view after the changes

You’ll notice that WPLY (West Plymouth) has been deleted, EGUY (Wyton) has been inserted and the name of EGTK has been changed. This will cause the following data to be written by the trigger(s) to the staging table:

Click image to enlarge

INGEST commands

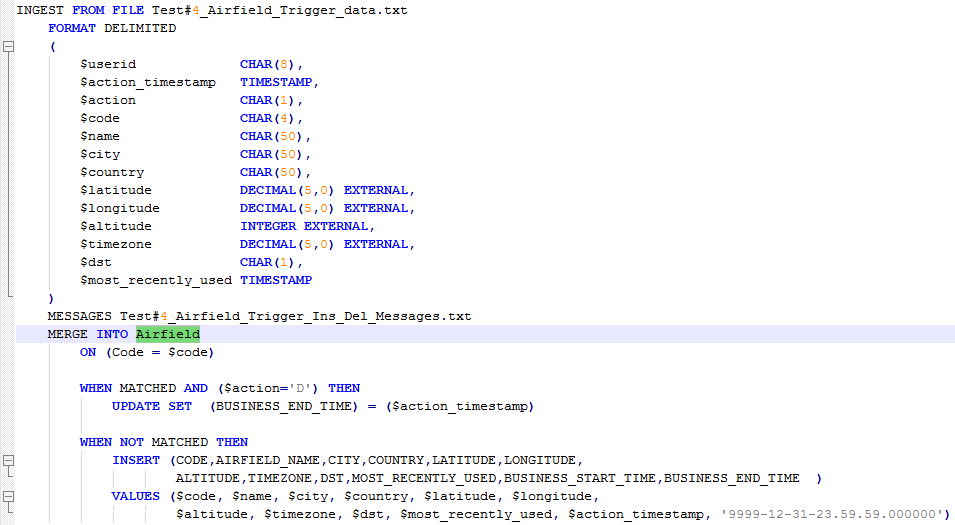

When it arrives at the source database, it becomes subject to two INGEST commands

This first one is very similar to what we saw in Part 1: when the primary key CODE is not matched then an INSERT is performed, but this time, with the Action Timestamp being used to populate the Business Start Time. The Business End Time is defaulted to end of time.

When the key is matched and the action code is ‘D’ (for delete), then a soft delete is performed, by updating the Business End Time with the Action Timestamp.

NB: these operations are performed directly against AIRFIELD

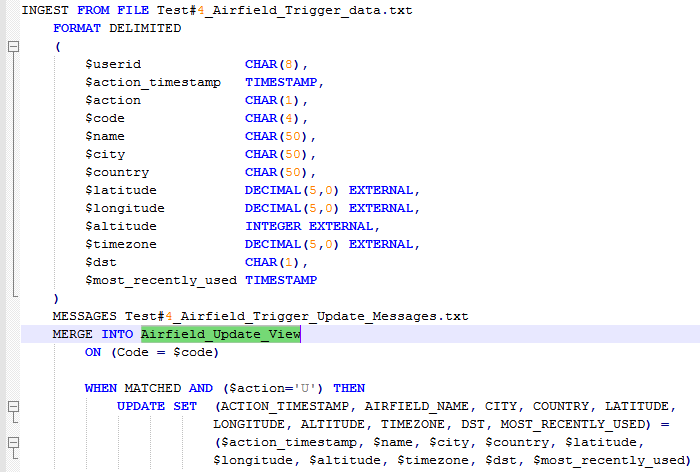

There is also a second INGEST command in the same script to deal with the UPDATE data (remember point 1 in the Provisos at the start of this blog?):

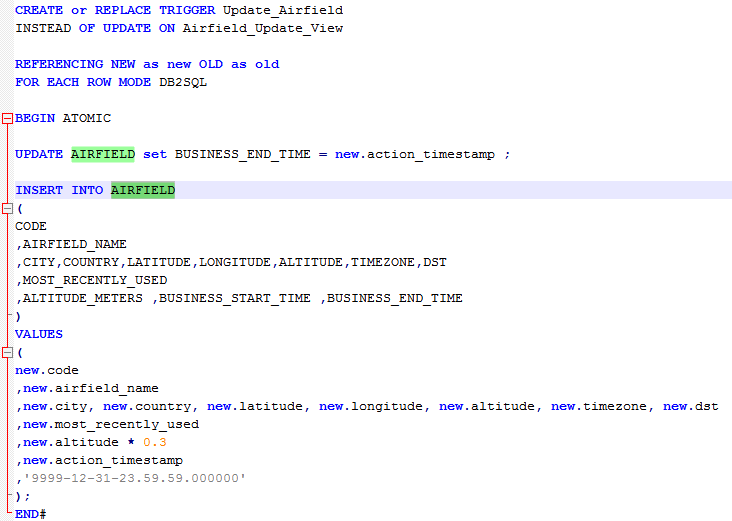

Because this INGEST command has to perform 2 operations (an Update to the existing row to close off the Business Time and an Insert of a new row with the new data), it has to refer to a View of the AIRFIELD table (which is nothing more than a SELECT * of the base table), and that view will have an INSTEAD OF TRIGGER defined on it:

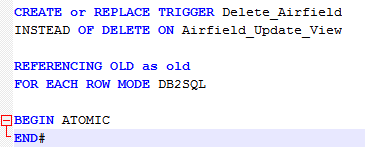

This facilitates the 2 required operations: the UPDATE to close off or “soft delete” the existing data, and an INSERT to supply the updated data as a new row. (But remember Proviso 3 at the start of this blog: if you define a Trigger for an INSTEAD OF UPDATE, you must also define one for INSERT and DELETE, even if you don’t want them. Just use a definition like this to keep DB2 happy)

Target data

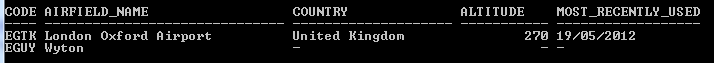

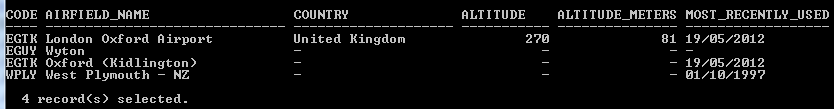

So, if you remember, after the UPDATE, INSERT and DELETE operations on the source table, the data looks like this:

The target data, however, will look like this:

With the addition of Business Start and End time data:

You can see that the deleted row is still available and the pre and post Update data is also still visible. Without delving too far into the operation of temporal data (but see my previous blogs https://www.triton.co.uk/time-travel-gotchas-part-1/ for some thoughts on this), this will allow the data in the target database to be interrogated in a historical fashion that will no longer be available on the source database.

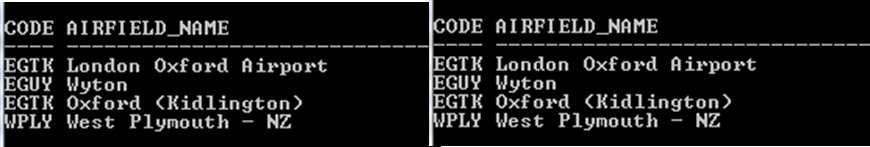

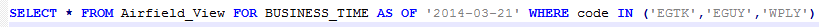

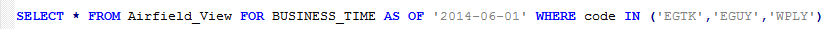

The query below shows what the data was on the 21st march

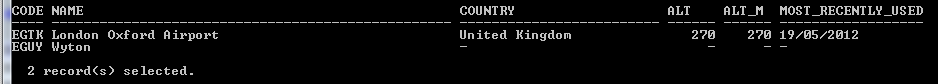

And the same query, for the 1st June, shows a different picture altogether

Overly complicated?

The above approach of using INGEST to maintain temporal data may seem a bit convoluted, but it’s no more than leveraging existing DB2 functionality and driving it with an INGEST command. Your existing DB2 DBA (or developers, for that matter) can build the necessary objects. You can dispense with ETL tools, man-power and maintenance and you can provide the analytical depth of functionality that features such as Time Travel Query offer.

Existing, familiar features like TRIGGERS and VIEWS can be exploited alongside INGEST by anyone familiar with DB2, to provide a robust, easily maintained and performant solution that can supplant third-party ETL tools.

Next time I’ll try and show that this fairly complex operation doesn’t mean a performance impact and outline a few of the gotchas you might come across

Watch the replay of the DB2Night Show featuring Mark Gillis talking about INGEST as an ETL Tool.

« Previous | Next »