Introduction

We live in an era in which public Clouds play an increasingly central role in the IT infrastructure design of every company.

Even if a company has not yet moved its servers, application services and databases to the Cloud, it still makes sense to seriously consider storing at least its database (and other) backups there, for a variety of reasons:

- Physical separation (if your on-site data centre burns down…)

- Excellent durability (“Amazon S3 is designed for 99.999999999% (11 9’s) of durability”)

- Ease of access (accessible from anywhere on the planet, literally)

- Zero maintenance (keeping everything up and running is the Cloud provider’s worry!)

- Lower Total Cost of Ownership (TCO can be significantly lower than for on-site storage)
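To put the durability figure in perspective, the Python sketch below treats 99.999999999% durability as an average annual loss probability of 10^-11 per stored object, with objects lost independently. Both are simplifying assumptions made for illustration, not AWS guarantees. It uses exact fractions to avoid floating-point rounding.

```python
from fractions import Fraction


def annual_loss_probability(nines: int) -> Fraction:
    """Per-object annual loss probability for a durability of `nines` nines.

    Assumption: 11 nines (99.999999999%) means a loss probability of 10**-11.
    """
    return Fraction(1, 10**nines)


def years_per_lost_object(objects: int, nines: int) -> Fraction:
    """Average number of years between single-object losses."""
    expected_losses_per_year = objects * annual_loss_probability(nines)
    return 1 / expected_losses_per_year


if __name__ == "__main__":
    # 10 million objects (e.g. backup image files) stored at 11 nines of durability
    print(years_per_lost_object(10_000_000, 11))  # 10000
```

Under these assumptions, someone storing ten million objects would on average lose one of them every 10,000 years.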

The goal of this test is to store DB2 backups directly on Amazon S3, the object storage service of AWS, one of today’s leading Cloud providers.

This will be done by setting up a DB2 storage access alias in a local DB2 instance (local meaning in the local data centre, not in AWS). The alias makes it possible to use the DB2REMOTE option in DB2 BACKUP commands.

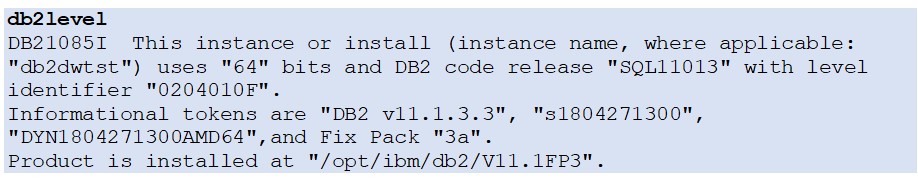
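A DB2REMOTE target combines the storage access alias with a path. The helper below is a minimal Python sketch that assumes the form DB2REMOTE://<alias>/<container>/<path>. In that form, the container part is left empty (giving a double slash) when the bucket was already specified in the alias definition. The alias, bucket and folder names are hypothetical, and the exact syntax should be checked against the DB2 documentation for your level.

```python
from typing import Optional


def db2remote_uri(alias: str, path: str, container: Optional[str] = None) -> str:
    """Build a DB2REMOTE://<alias>/<container>/<path> target.

    Assumption: when the storage access alias was cataloged WITH a CONTAINER
    (the S3 bucket), the container part is left empty, which produces the
    double-slash form DB2REMOTE://<alias>//<path>.
    """
    if not alias or "/" in alias:
        raise ValueError("alias must be a non-empty name without '/'")
    container_part = container or ""
    return f"DB2REMOTE://{alias}/{container_part}/{path.strip('/')}"


if __name__ == "__main__":
    # Hypothetical alias, bucket and folder names
    print(db2remote_uri("s3alias", "db2backups"))              # DB2REMOTE://s3alias//db2backups
    print(db2remote_uri("s3alias", "db2backups", "mybucket"))  # DB2REMOTE://s3alias/mybucket/db2backups
```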

A Linux server (RH 7.1) with preinstalled DB2 v11.1 will be used for this purpose.

Set up

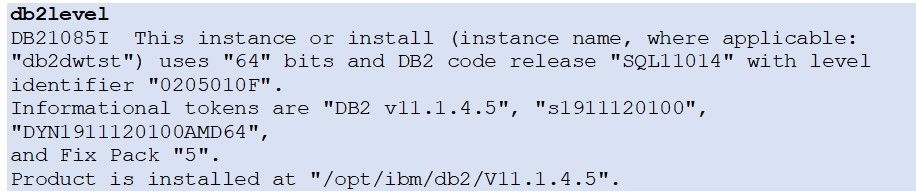

The current DB2 instance level:

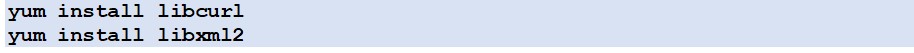

Install the prerequisites:

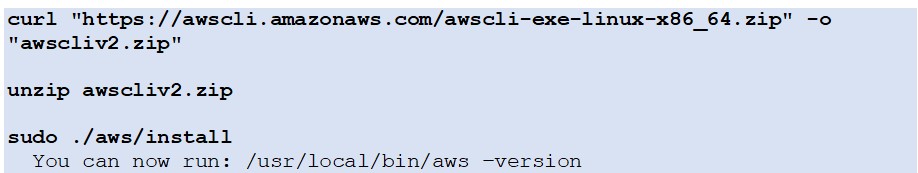

Set up the AWS CLI:

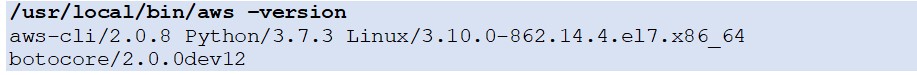

Check the version:

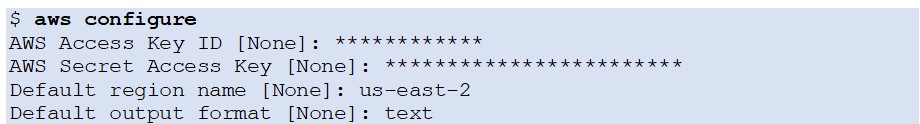

Configure the AWS CLI:

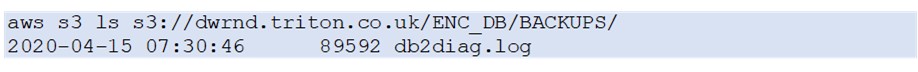
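`aws configure` stores its answers in two INI-style files, ~/.aws/credentials and ~/.aws/config. For scripted set-ups, the Python sketch below renders the same content for a single profile. It uses AWS's documented example keys, and the region is a hypothetical choice. Real secret keys belong only in a file readable by the user who runs the CLI.

```python
import configparser
import io


def render_aws_files(access_key: str, secret_key: str, region: str,
                     output: str = "json", profile: str = "default") -> dict:
    """Render ~/.aws/credentials and ~/.aws/config contents for one profile."""
    # interpolation=None so characters such as '%' in a secret are kept literally
    credentials = configparser.ConfigParser(interpolation=None)
    credentials[profile] = {
        "aws_access_key_id": access_key,
        "aws_secret_access_key": secret_key,
    }
    config = configparser.ConfigParser(interpolation=None)
    # In ~/.aws/config, named (non-default) profiles use a "profile <name>" header.
    section = profile if profile == "default" else f"profile {profile}"
    config[section] = {"region": region, "output": output}

    rendered = {}
    for name, parser in (("credentials", credentials), ("config", config)):
        buf = io.StringIO()
        parser.write(buf)
        rendered[name] = buf.getvalue()
    return rendered


if __name__ == "__main__":
    # AWS's documented example keys, not real credentials
    files = render_aws_files("AKIAIOSFODNN7EXAMPLE",
                             "wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY",
                             "eu-west-1")
    print(files["config"])
```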

Test the access:

Works fine!

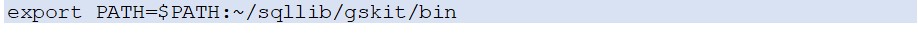

First, this must be added to .bashrc (it seems to be missing from db2profile?!):

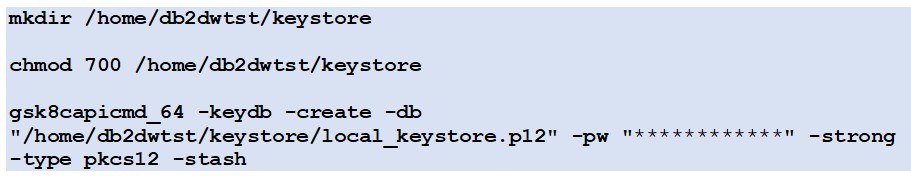

Create the local keystore:

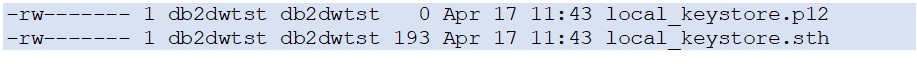

The keystore password stash file has been updated correctly:

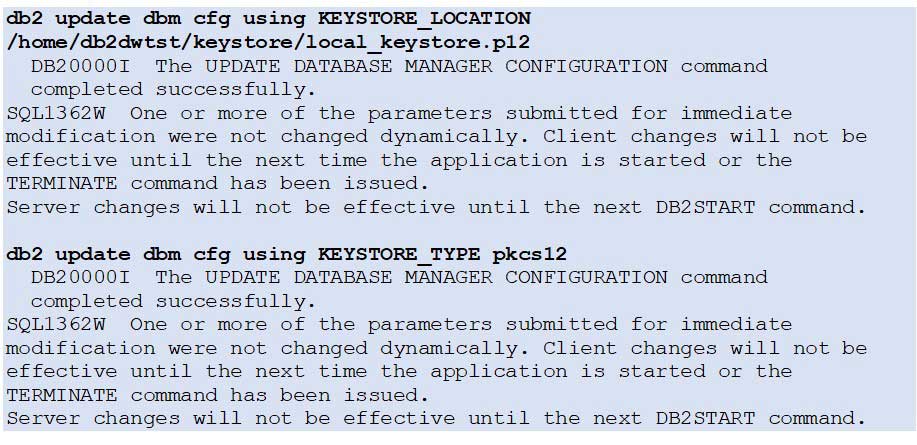

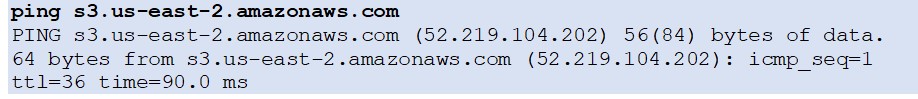
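The keystore step uses GSKit's `gsk8capicmd_64`. The Python sketch below only builds the command line for a PKCS#12 keystore with a password stash and works out where the stash file lands: next to the keystore, with the same base name and a `.sth` extension. The keystore path is hypothetical. Passing `-pw` on the command line makes the password briefly visible in the process list, so an interactive run may be preferable.

```python
import shlex
from pathlib import PurePosixPath


def create_keystore_cmd(keystore: str, password: str) -> list:
    """argv for creating a PKCS#12 keystore plus password stash with GSKit."""
    return ["gsk8capicmd_64", "-keydb", "-create",
            "-db", keystore, "-pw", password,
            "-type", "pkcs12", "-stash"]


def stash_file_for(keystore: str) -> str:
    """-stash stores the password next to the keystore with a .sth extension."""
    return str(PurePosixPath(keystore).with_suffix(".sth"))


if __name__ == "__main__":
    ks = "/home/db2dwtst/keystore/db2keystore.p12"  # hypothetical location
    cmd = create_keystore_cmd(ks, "<keystore-password>")
    print(" ".join(shlex.quote(arg) for arg in cmd))
    print(stash_file_for(ks))
```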

Configure DB2 to use the keystore:

Restart the instance:

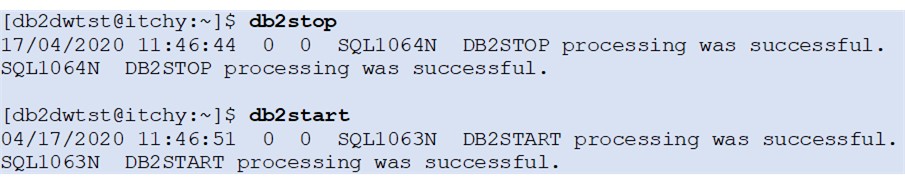
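Registering the keystore means setting the KEYSTORE_TYPE and KEYSTORE_LOCATION database manager parameters and then restarting the instance. The Python sketch below lists those CLP commands for a hypothetical keystore path. In place of execution, it prints them in a dry run so they can be reviewed first.

```python
import shlex


def keystore_setup_commands(keystore: str) -> list:
    """DB2 CLP commands (run as the instance owner) to register the keystore
    in the database manager configuration and restart the instance."""
    return [
        ["db2", "update", "dbm", "cfg", "using",
         "keystore_type", "pkcs12", "keystore_location", keystore],
        ["db2stop"],
        ["db2start"],
    ]


def dry_run(commands: list) -> str:
    """Return the commands as shell text instead of executing them."""
    return "\n".join(" ".join(shlex.quote(a) for a in cmd) for cmd in commands)


if __name__ == "__main__":
    print(dry_run(keystore_setup_commands("/home/db2dwtst/keystore/db2keystore.p12")))
```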

Check the S3 endpoint:

OK!

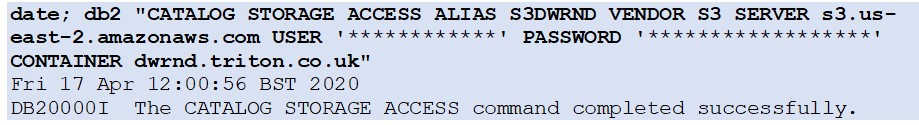
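A basic endpoint check confirms that the DB2 server can resolve the S3 host name and open a TCP connection to it on port 443. The Python sketch below assumes the regional endpoint form s3.<region>.amazonaws.com, and the region is hypothetical. A successful connection says nothing about credentials or bucket permissions.

```python
import socket


def s3_endpoint(region: str) -> str:
    """Regional S3 endpoint host name (assumed form: s3.<region>.amazonaws.com)."""
    return f"s3.{region}.amazonaws.com"


def endpoint_reachable(host: str, port: int = 443, timeout: float = 5.0) -> bool:
    """True if a TCP connection to host:port can be opened within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers DNS failures, refusals and timeouts
        return False


if __name__ == "__main__":
    host = s3_endpoint("eu-west-1")  # hypothetical region
    print(host, "reachable" if endpoint_reachable(host) else "NOT reachable")
```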

Catalog the storage access alias:

The keystore database file (p12) is updated:

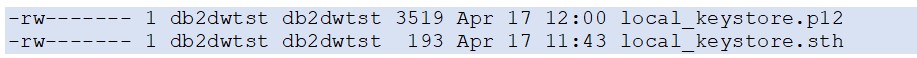
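The alias itself is created with a CATALOG STORAGE ACCESS command, which stores the S3 access key and secret in the keystore. The Python sketch below builds such a statement from hypothetical values. The quoting of USER, PASSWORD and CONTAINER is an assumption to verify against the command reference for your fix pack.

```python
def catalog_s3_alias(alias: str, endpoint: str, access_key: str,
                     secret_key: str, bucket: str = "") -> str:
    """Build a CATALOG STORAGE ACCESS statement for an S3 alias."""
    stmt = (f"CATALOG STORAGE ACCESS ALIAS {alias} VENDOR S3 SERVER {endpoint} "
            f"USER '{access_key}' PASSWORD '{secret_key}'")
    if bucket:
        # Optional: bind the alias to one bucket (container)
        stmt += f" CONTAINER '{bucket}'"
    return stmt


if __name__ == "__main__":
    # Hypothetical alias, region and bucket; AWS's documented example keys
    stmt = catalog_s3_alias("s3alias", "s3.eu-west-1.amazonaws.com",
                            "AKIAIOSFODNN7EXAMPLE",
                            "wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY",
                            "mybucket")
    print(f'db2 "{stmt}"')
```

Running `db2 list storage access` afterwards should show the new alias.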

List the defined storage access:

OK!

Test

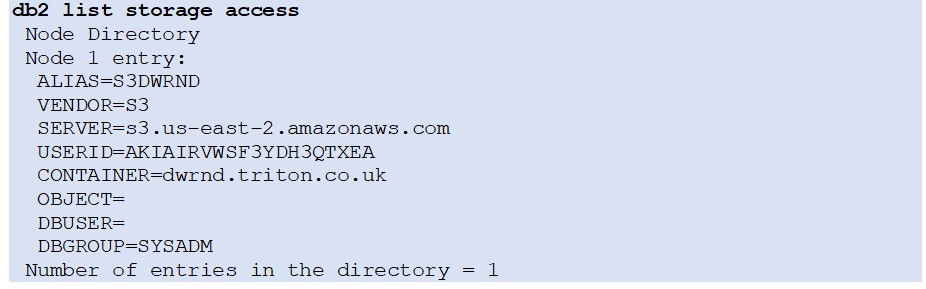

Back up a database to the S3 storage alias:
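A backup to the alias is an ordinary BACKUP DATABASE command with a DB2REMOTE target. The Python sketch below builds that command line. It assumes the alias was cataloged with a CONTAINER (hence the double slash), and the database, alias and folder names are hypothetical. The optional COMPRESS keyword is shown only as an illustration of reducing the amount of data sent over the network.

```python
import shlex


def backup_to_s3_cmd(database: str, alias: str, path: str,
                     compress: bool = False) -> list:
    """argv for a DB2 CLP backup to an S3 storage access alias.

    Assumes the alias was cataloged with a CONTAINER, hence the
    DB2REMOTE://<alias>//<path> form.
    """
    target = f"DB2REMOTE://{alias}//{path.strip('/')}"
    cmd = ["db2", "backup", "database", database, "to", target]
    if compress:
        cmd.append("compress")  # smaller image, less data to upload
    return cmd


if __name__ == "__main__":
    print(" ".join(shlex.quote(a) for a in backup_to_s3_cmd("DWTST", "s3alias", "db2backups")))
```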

This fails with an error, so let’s investigate:

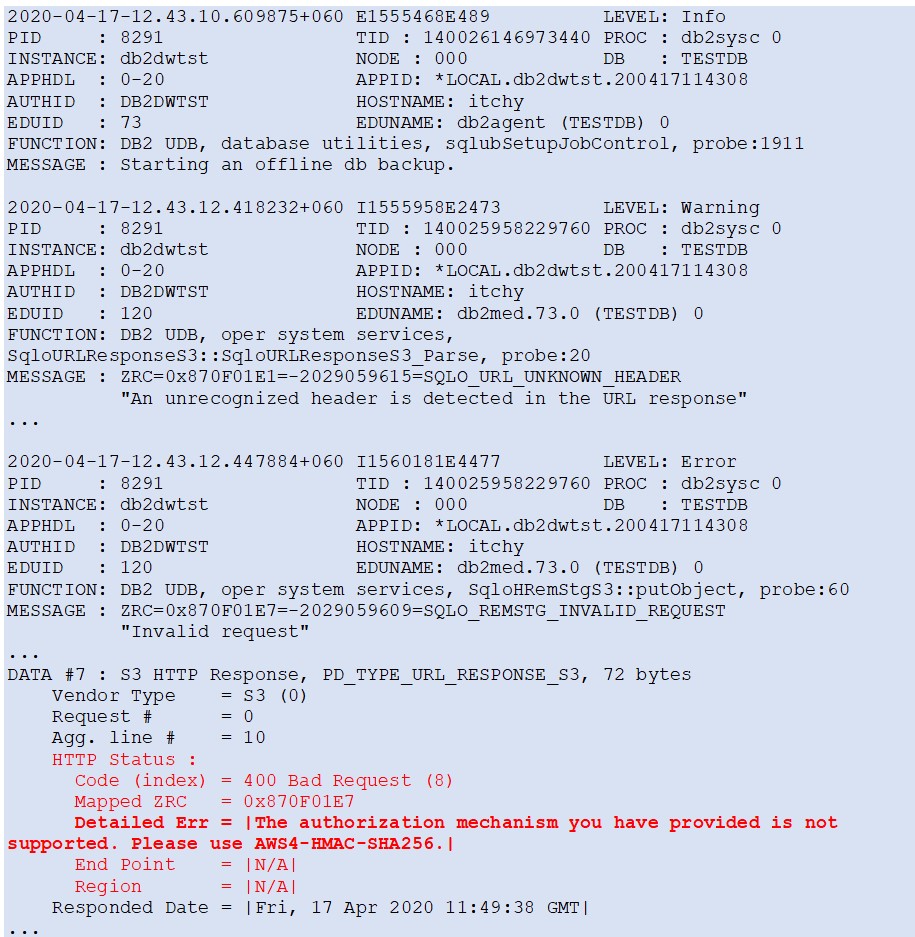

From the db2diag.log (the important bits are marked in red!):

We need to set up AWS Signature Version 4 (AWS4) authentication!
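In a long db2diag.log, the relevant records can be pulled out by severity. The Python sketch below assumes that records are separated by blank lines and that each record header carries a `LEVEL: <severity>` field. It keeps only Error, Severe and Critical records. The `db2diag` tool itself also offers filtering, for example by level, which may be the more convenient route on the server.

```python
import re
import sys

# Assumption: records are separated by blank lines and each header carries
# a "LEVEL: <severity>" field (Info, Warning, Error, Severe, Critical, Event).
SERIOUS = re.compile(r"LEVEL:\s*(Error|Severe|Critical)\b")


def serious_records(log_text: str) -> list:
    """Return the db2diag.log records whose LEVEL is Error, Severe or Critical."""
    records = re.split(r"\n\s*\n", log_text.strip())
    return [rec for rec in records if SERIOUS.search(rec)]


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python diagfilter.py ~/sqllib/db2dump/db2diag.log (path may differ)
    with open(sys.argv[1], errors="replace") as f:
        for rec in serious_records(f.read()):
            print(rec, end="\n\n")
```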

The relevant AWS documentation is the article “Using the AWS SDKs, CLI” (https://docs.aws.amazon.com/AmazonS3/latest/dev/UsingAWSSDK.html):

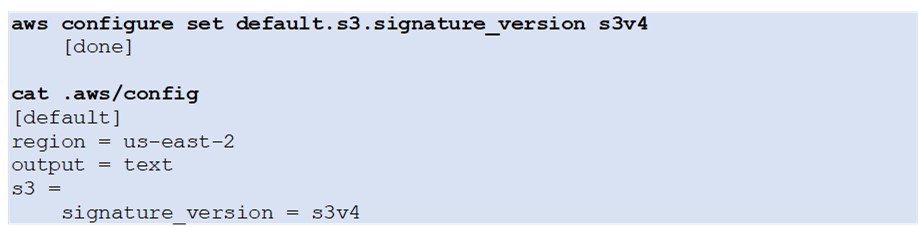

AWS4 authentication for the AWS CLI is set up with:

(This alone did not help: the same errors still appeared in db2diag.log. But despair not, read on!)

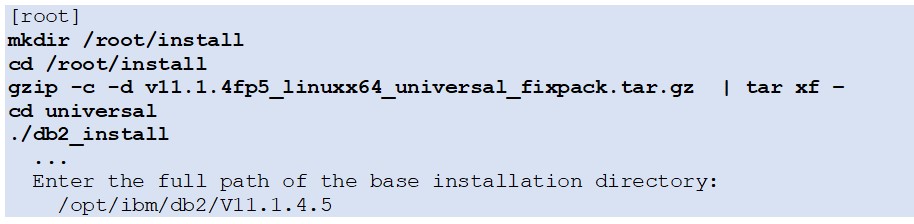
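For the AWS CLI, Signature Version 4 for S3 is a profile setting (`s3.signature_version = s3v4`) that can be written with `aws configure set`. The Python sketch below builds that command and does a rough text check of an ~/.aws/config file. As noted above, this setting affects the AWS CLI only and did not by itself change DB2's behaviour.

```python
import re
from pathlib import Path


def s3v4_cmd(profile: str = "default") -> list:
    """argv for switching the AWS CLI's S3 requests to Signature Version 4."""
    if profile == "default":
        return ["aws", "configure", "set", "default.s3.signature_version", "s3v4"]
    return ["aws", "configure", "set", "s3.signature_version", "s3v4",
            "--profile", profile]


def config_uses_s3v4(config_text: str) -> bool:
    """Rough check that an ~/.aws/config text contains signature_version = s3v4."""
    pattern = r"^\s*signature_version\s*=\s*s3v4\s*$"
    return re.search(pattern, config_text, re.MULTILINE) is not None


if __name__ == "__main__":
    print(" ".join(s3v4_cmd()))
    cfg = Path.home() / ".aws" / "config"
    if cfg.exists():
        print("s3v4 set:", config_uses_s3v4(cfg.read_text()))
```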

However, the DB2 Knowledge Center (KC) states explicitly that the DB2 instance must be upgraded to v11.1.4.5 to enable AWS4 authentication:

New Amazon S3 support

Starting in Version 11.1 Mod Pack 4 and Fix Pack 5, support for AWS S3 signature version 4 is available.
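Whether an instance meets this requirement can be read from `db2level`, whose informational tokens include a string such as "DB2 v11.1.4.5". The Python sketch below extracts the four-part level and compares it with 11.1.4.5 as a tuple. The regular expression is an assumption about the output format.

```python
import re
import subprocess
from typing import Tuple

MIN_AWS4_LEVEL = (11, 1, 4, 5)  # v11.1 Mod Pack 4 Fix Pack 5, per the KC note above


def parse_db2level(text: str) -> Tuple[int, ...]:
    """Extract (version, release, mod pack, fix pack) from `db2level` output."""
    m = re.search(r"DB2 v(\d+)\.(\d+)\.(\d+)\.(\d+)", text)
    if m is None:
        raise ValueError("no 'DB2 vX.Y.Z.W' token found")
    return tuple(int(g) for g in m.groups())


def supports_s3_sigv4(level) -> bool:
    """Tuple comparison: (11, 1, 4, 5) or later supports AWS S3 signature v4."""
    return tuple(level) >= MIN_AWS4_LEVEL


if __name__ == "__main__":
    try:
        out = subprocess.run(["db2level"], stdout=subprocess.PIPE,
                             universal_newlines=True, check=True).stdout
    except (FileNotFoundError, subprocess.CalledProcessError) as exc:
        print("could not run db2level:", exc)
    else:
        level = parse_db2level(out)
        print(level, "AWS4 OK" if supports_s3_sigv4(level) else "needs a newer fix pack")
```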

So, install Fix Pack 5 (v11.1.4.5):

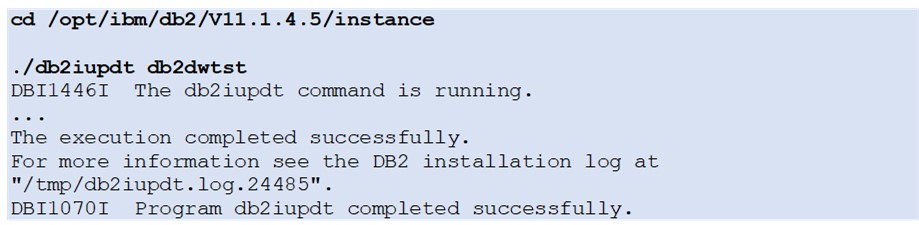

Update the DB2 instance db2dwtst:

New DB2 instance level:

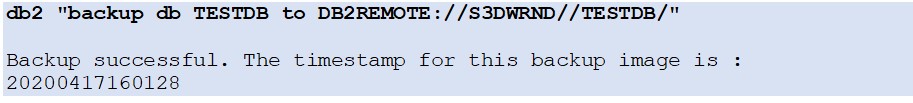
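The upgrade boils down to stopping the instance, running `installFixPack` as root from the unpacked fix pack image, updating the instance with `db2iupdt` and checking the new level. The Python sketch below lists those steps as (run-as, command) pairs for the instance used here. The install path /opt/ibm/db2/V11.1 is an assumption, and IBM's fix pack instructions cover further steps omitted here, for example updating each database's system catalog with `db2updv111`.

```python
import shlex

DB2DIR = "/opt/ibm/db2/V11.1"  # assumption: default root install path, adjust as needed


def fixpack_steps(instance: str, db2dir: str = DB2DIR) -> list:
    """(run-as, argv) pairs for applying a fix pack and updating one instance."""
    return [
        (instance, ["db2stop", "force"]),
        ("root", ["./installFixPack", "-b", db2dir]),  # run from the unpacked fix pack directory
        ("root", [f"{db2dir}/instance/db2iupdt", instance]),
        (instance, ["db2start"]),
        (instance, ["db2level"]),  # confirm the new level
    ]


if __name__ == "__main__":
    for user, argv in fixpack_steps("db2dwtst"):
        print(f"[{user}] " + " ".join(shlex.quote(a) for a in argv))
```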

Let’s try the backup to S3 again:

Hooray, success!!

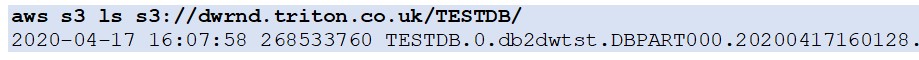

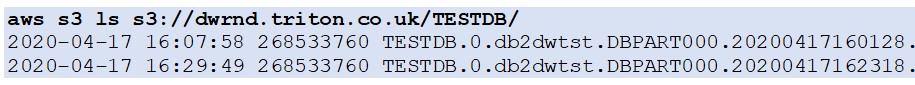

Check the backup image file on S3:

All good!

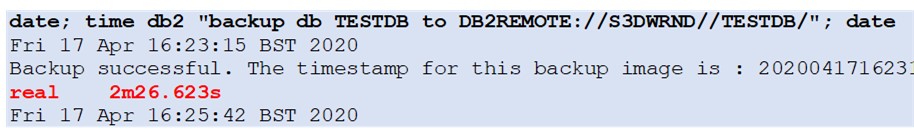
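DB2 backup image names encode the database alias, backup type, instance, partition, timestamp and sequence number (DBALIAS.TYPE.INSTANCE.DBPARTnnn.TIMESTAMP.SEQ). The Python sketch below splits such a name, for example the last component of an S3 object key, into its parts. The sample name and the database alias DWTST are hypothetical.

```python
from datetime import datetime
from typing import NamedTuple

BACKUP_TYPES = {"0": "full database", "3": "table space", "4": "load copy"}


class BackupImage(NamedTuple):
    db_alias: str
    backup_type: str
    instance: str
    partition: int
    timestamp: datetime
    sequence: int


def parse_backup_name(name: str) -> BackupImage:
    """Split DBALIAS.TYPE.INSTANCE.DBPARTnnn.TIMESTAMP.SEQ into its fields."""
    name = name.rsplit("/", 1)[-1]  # accept a full S3 key as well
    parts = name.split(".")
    if len(parts) != 6 or not parts[3].startswith("DBPART"):
        raise ValueError(f"not a DB2 backup image name: {name!r}")
    alias, btype, instance, part, ts, seq = parts
    return BackupImage(alias, BACKUP_TYPES.get(btype, btype), instance,
                       int(part[len("DBPART"):]),
                       datetime.strptime(ts, "%Y%m%d%H%M%S"), int(seq))


if __name__ == "__main__":
    print(parse_backup_name("db2backups/DWTST.0.db2dwtst.DBPART000.20190115102233.001"))
```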

Time the backup:

Check the backup images again:

Conclusion

After installing the OS prerequisites, setting up the required AWS4 authentication and bringing the instance to the appropriate DB2 fix pack level (v11.1.4.5), DB2 backups can be written directly to S3 storage using the DB2REMOTE storage access alias!

The backup speed will, of course, depend on the network bandwidth between the local data centre and AWS S3.
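A rough estimate shows the effect of network bandwidth: transfer time is the image size in bits divided by the usable link speed. The Python sketch below computes that estimate and, from a timed run, the throughput actually achieved. The sizes, link speeds and the `efficiency` factor are hypothetical inputs, and DB2 processing and S3 request overhead are ignored.

```python
def transfer_seconds(size_bytes: int, bandwidth_mbit_s: float,
                     efficiency: float = 1.0) -> float:
    """Estimated time to send `size_bytes` over a `bandwidth_mbit_s` link,
    of which only the fraction `efficiency` is assumed to be usable."""
    if bandwidth_mbit_s <= 0 or not 0 < efficiency <= 1:
        raise ValueError("bandwidth must be > 0 and 0 < efficiency <= 1")
    return size_bytes * 8 / (bandwidth_mbit_s * 1_000_000 * efficiency)


def observed_mbit_s(size_bytes: int, elapsed_seconds: float) -> float:
    """Effective throughput of a timed backup, in megabits per second."""
    return size_bytes * 8 / elapsed_seconds / 1_000_000


if __name__ == "__main__":
    # Hypothetical: a 1 GB backup image over a 100 Mbit/s link
    print(transfer_seconds(1_000_000_000, 100))  # 80.0
```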