In the second blog in this series (Messing with JSON data in Db2 part 2), I suggested that I was going to “go through the same processes and use the same data but do it in MySQL”. So, this is Part 3, but using a different flavour of database.

In essence, I wanted to satisfy myself that I can:

- take the same body of data ( a set of JSON docs relating to FitBit activity data )

- load it into my database

- post-process it (build additional columns with reformatted data values) and then

- export it to a flat-file and

- load it into Excel and view aggregated data.

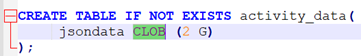

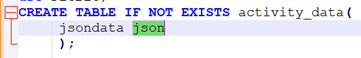

Define the table

Almost, but not quite the same. In the Db2 table I defined the column.

Db2 doesn’t have any native JSON storage but MySQL does, so the definition is

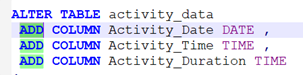

I also need three columns to accommodate the ‘post-processing’ I’m going to do, and that syntax is identical.

(that could, of course, be added in at the CREATE TABLE stage but I kept it as a separate command in order to address it as a further bit of processing).

Getting the data in

In the first part of the Db2 series (Messing with JSON data in Db2) I showed how I combined a number of .json files into a single ‘unformatted’ file (using a Python routine) and then ran a simple IMPORT command to put the data into the JSONDATA column of my ACTIVITY_DATA table.

I couldn’t get anything comparable to work in a script (if I have missed a trick here, please let me know).

What I have ended up with is an execution of the importJson utility via the MySQL shell. Here is what I run (although this is a single command submitted as one-line, I’ve spaced it out on to separate lines in order to make the explanation simpler)

mysqlsh root@localhost:33060/fitbit

-- util importJson

"source_folder/exercise_unformatted.json"

--table=activity_data

--tableColumn=jsondata

The first line invokes the MySQL shell and passes the connection information in the format

user@host:port/database

The second line invokes the importJson utility and then the next three parameters are

-

location of the source data (which is the unformatted JSON produced by my Jupyter iPython routine)table name andcolumn within the table

What I get from that is a prompt for the password, then some confirmation info about what I am doing, and then a result:

![]()

Post-processing

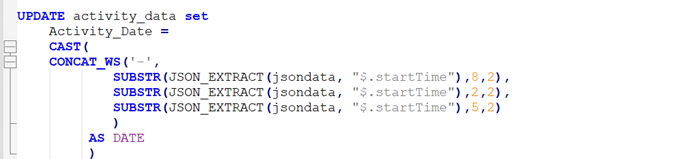

Similar to what I had to do in Db2; I need to populate my 3 non-JSON columns with relational data, in a format that my database understands, so that the data can be used in some aggregation queries. In Db2 that was a fairly complex UPDATE statement; I’m afraid it is a similarly convoluted piece of SQL for MySQL.

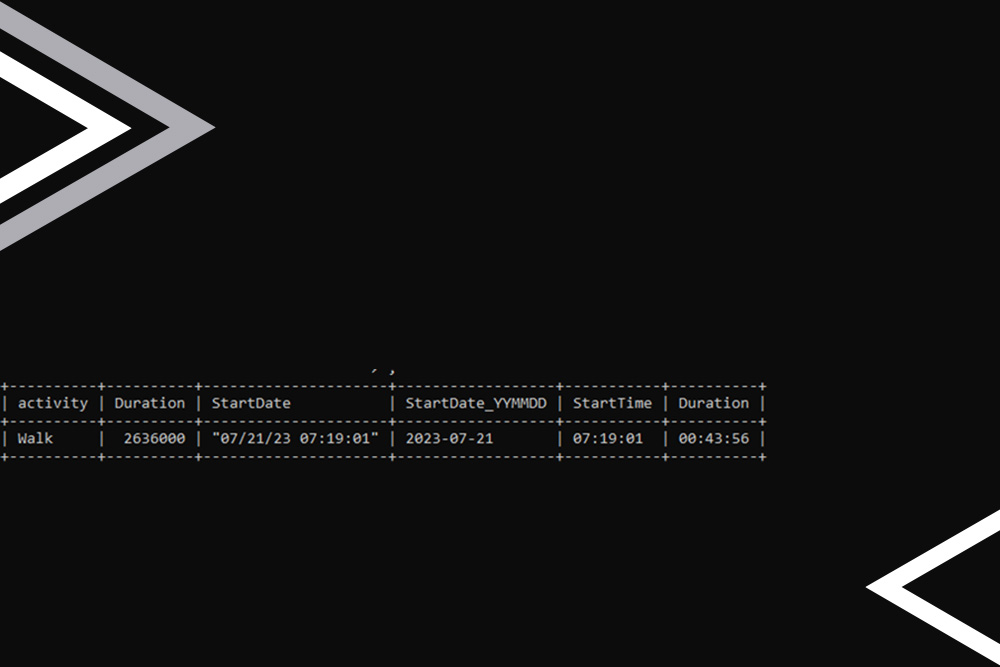

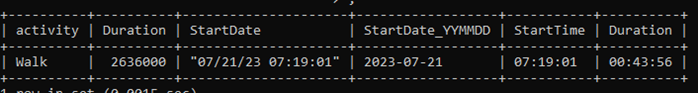

What I need to do is to take the raw data (Duration and StartDate) and build a recognisable start date and time and to convert the duration into an hours and minutes expression:

The first bit

reorders the JSON date format DD/MM/YY and turns it into YYYY-MM-DD and uses the CONCAT_WS to put hyphens between each component (nice feature).

Then a simple CAST to turn the time part of the StartDate string into an actual TIME Data Type column

![]()

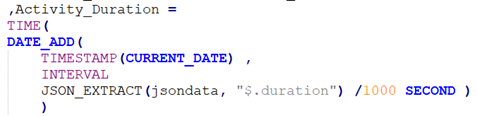

And then a similar expression to that in Db2, although syntactically different, to

-

-

- find the timestamp for the start of today

- convert the duration from milliseconds to seconds

- add 2) to 1)

-

Getting the data out

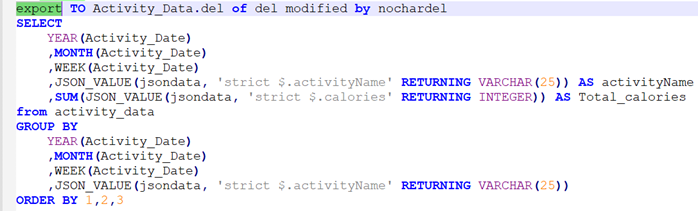

In Db2 this was a simple EXPORT followed by a straight-forward SELECT and GROUP BY:

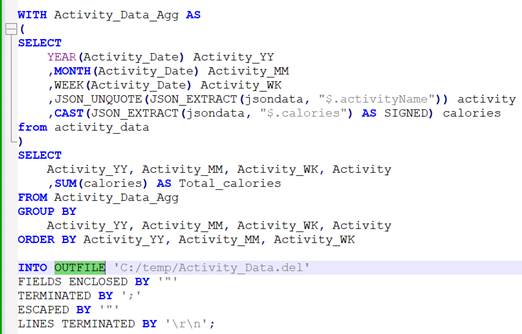

The main differences with MySQL were that I had to use a Common-Table Expression (again, any MySQL gurus out there with better ideas; please shout) and the data is written out using an OUTFILE clause plus options

But the nett effect is the same; a comma-delimited file that can be imported into Excel.

Conclusion

Nothing earth-shattering to conclude here. I just wanted to confirm that databases can deliver the same solution. It would be a bit horrifying if they couldn’t but also, notwithstanding the oft-repeated claims about SQL compatibility, I wanted to examine the Db2 syntax and that for MySQL and determine what the differences were.

I often think it’s a good exercise to compare and contrast products and taking the same source and aiming to produce the same results is one way to exercise that scenario thoroughly.

I’m a Db2 guy so I don’t pretend to offer anything other than ‘one of the possible solutions’ for MySQL here. If anyone wants to make suggestions or offer better ways of doing this, please email me at mark.gillis@triton.co.uk

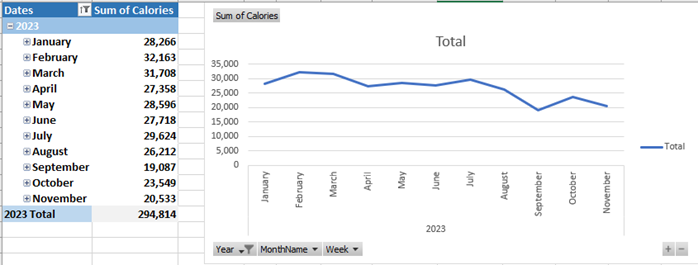

And I suppose my final take-away from this exercise is (looking at the trend in that Calories chart), I need to up my game a bit with respect to my cardio-vascular exercise.